I am not in the habit of commenting on ephemeral events, but this was brought to my attention by interested parties in a decidedly snarky fashion which obliges me to respond.

Briefly, Neil Hall introduced the “Kardashian index” to quantify the discrepancy between the social media profile of scientists (measured by the number of Twitter followers) and their publication record (measured by citation count). The index is designed to identify “Kardashians” in science – people who are better known than merited by their scholarly contributions to the field.
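For readers who want to see the mechanics, here is a minimal sketch of how such an index can be computed. It assumes the form reported in Hall's paper: an expected follower count fitted as F(c) = 43.3 · C^0.32 from the citation count C, with the index being actual followers divided by expected followers. The constants and the function names below are assumptions for illustration and should be checked against the paper before being relied upon.

```python
def expected_followers(citations, scale=43.3, exponent=0.32):
    """Expected Twitter followers for a given citation count.

    The default constants are assumed from the fit reported in Hall's paper;
    treat them as illustrative rather than authoritative.
    """
    if citations < 0:
        raise ValueError("citations must be non-negative")
    return scale * citations ** exponent


def k_index(followers, citations):
    """Ratio of actual followers to the followers 'expected' from citations."""
    expected = expected_followers(citations)
    if expected == 0:
        raise ValueError("index is undefined for zero citations")
    return followers / expected


if __name__ == "__main__":
    # Hypothetical scientist: 5,000 followers and 1,000 citations.
    print(round(k_index(5000, 1000), 2))
```

Note that both inputs are popularity counts, which is precisely the conceptual problem discussed further down: the ratio compares two kinds of fame rather than fame against contribution.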

Of course, the actual Kardashians are entirely indefensible, as they – by their very existence – take scarce and valuable attention away from more worthy issues that are in urgent need of debate. Perhaps this is their systemic purpose. So I won’t attempt a hopeless defense. However, the validity of the allegations implicit in creating such an index for science is questionable at best.

To be sure, Dr. Hall raises an important issue. It is true that there are a lot of self-important gasbags high on the “Kardashian” list who stopped contributing to science decades ago, people who are essentially using their scientific soapbox only to bloviate about ideological pet interests they are ill-equipped to fully comprehend.

Moreover, the Kardashian effect is quite real. While I do not believe that anyone on the list released a sex tape to gain attention, there is now a decided Matthew effect in place where attention begets more attention, whether it was begotten in a legitimate fashion or not. The victims of this regrettable process are the hordes of nameless and faceless graduate students and postdocs who toil in obscurity, making countless personal sacrifices on a daily basis and with scant hope of ever being recognized for their efforts.

Yet, introducing a “Kardashian index” betrays several profound misunderstandings that – if spread – will likely do more harm than good.

First, it exposes a fundamental misunderstanding of the nature of Twitter itself. Celebrity is not the issue here. I follow thousands of accounts on Twitter. Not all of them are even human. I know almost none personally and would be hard pressed to recognize more than a few of them. Put differently, I’m not following them because of who they are, but largely because of what they have to say. If they were actual Kardashians, this would be a no-brainer, as they have nothing valuable to say. The message (information), not the person (celebrity), is at the heart of (at least my) following behavior on Twitter.

Second, there are many ways for the modern academic to make a meaningful contribution. Outreach comes to mind. What is so commendable about narrowly advancing one’s own agenda by publishing essentially the same paper 400 times while taking the funding that fuels this research and is provided by the population at large completely for granted? In an age of scarce economic resources, patience for this kind of approach is wearing thin and outreach efforts should be applauded, not marginalized. There are already far too few incentives for outreach efforts in academia, meaning that the people who are doing outreach despite the lack of extrinsic rewards will tend to be those who are intrinsically motivated to do so. Another “Kardashian” high on the list comes to mind – Neil deGrasse Tyson. So what if he made few (if any) original contributions to science itself – he certainly inspires lots of those who are inclined to be so inspired. Why is that not a valuable contribution to the scientific enterprise more broadly conceived? Of course, it is worth noting that this should be done with integrity and class.

The scientific “Kardashians”. As far as I know, none of them released a sex tape to garner initial fame

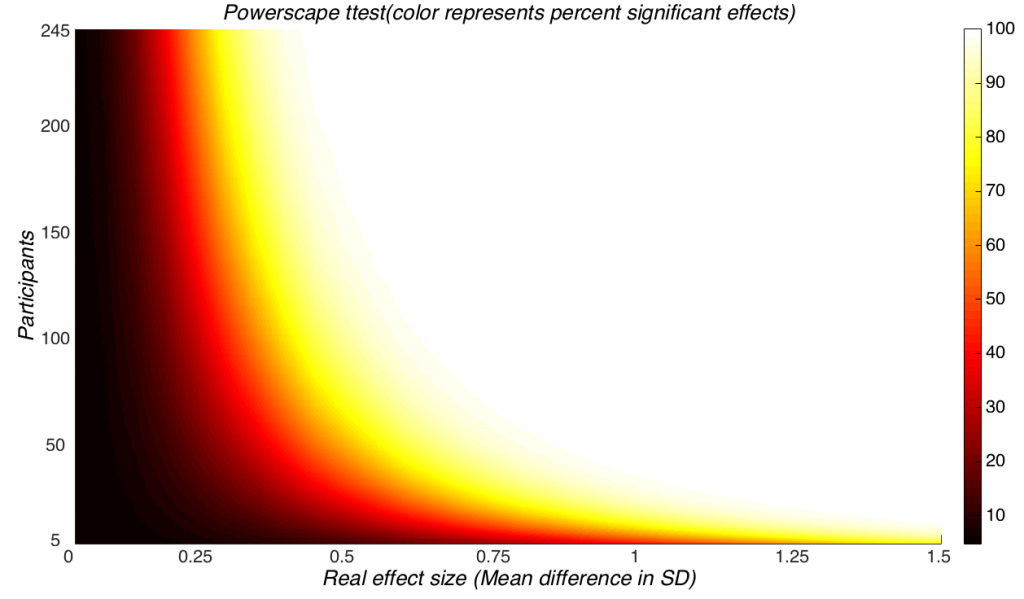

Third, if there are many ways to make a contribution to science, why privilege one of them? Doing so would be justified if there were some kind of unassailable gold standard by which one could measure scientific contributions. But to suggest that mere citation counts could serve as such a standard in a time of increasing awareness of serious problems inherent to the classic peer review system (to name just a few: it is easy to game, there are rampant replication issues and perverse incentives, all of which are increasingly reflected in ever rising retraction numbers) is as naive as it is irresponsible.

Pyrite or fool’s gold. This is a metaphor. Only a fool would take citation counts as a gold standard of scientific merit. It does happen, as fools abound – there is never a shortage of those…

All of these concerns aside, this matter highlights the issue of the link between contributions to society (“achievement”) and recognition by society (“fame”). The implication of the “Kardashian index” is that this link is particularly loose if one uses Twitter followers as a metric of fame and citations as a measure of contribution. Of course, we know that this is absurd. We don’t have to speculate – serious scholars like Mikhail Simkin have made it their life’s work to elucidate the link between achievement and fame. In every field Simkin considered to date (and by every metric he looked at), this link is tenuous at best. Importantly, it is particularly loose for the case of citations. As a matter of fact, the distribution of citation counts is *entirely consistent* with luck alone, due to the fact that papers are often not read, despite being cited. For fields where such data is available, the “best” papers are rarely the most cited papers. Put differently, citations are not a suitable proxy for achievement, as they are also just another proxy for fame. This is not entirely without irony, but – to put it frankly – the “Kardashian index” seems to be wholly without any conceptual foundation whatsoever in light of this research.

In fact, most papers are never cited, whereas a few are cited hundreds of thousands of times. Taking the Kardashian index seriously implies that most papers are completely worthless. Perhaps this is so, but maybe most people are just bad at playing this particular self-promotion game? I’m not sure if there is an inequality index akin to the Gini coefficient for citations, but I would venture to guess that it would be staggering and that the top 1% of cited papers would easily account for more citations than the other 99%.
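For what it is worth, the Gini coefficient can be applied to any list of non-negative counts, citations included. The sketch below shows how one might compute it, along with the share of all citations held by the top 1% of papers. The function names and the toy citation counts are made up for illustration; no real citation data is used here, so the numbers say nothing about the actual guess above.

```python
def gini(values):
    """Gini coefficient of non-negative values (0 = perfectly equal)."""
    xs = sorted(values)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total == 0:
        return 0.0
    # Standard formula for sorted data:
    # G = 2 * sum(i * x_i) / (n * sum(x)) - (n + 1) / n, with ranks i = 1..n
    weighted = sum(rank * x for rank, x in enumerate(xs, start=1))
    return 2.0 * weighted / (n * total) - (n + 1) / n


def top_share(values, fraction=0.01):
    """Share of the total held by the top `fraction` of items (at least one item)."""
    xs = sorted(values, reverse=True)
    total = sum(xs)
    if not xs or total == 0:
        return 0.0
    k = max(1, int(len(xs) * fraction))
    return sum(xs[:k]) / total


if __name__ == "__main__":
    # Hypothetical toy data: 90 uncited papers, 9 cited once, 1 cited 1,000 times.
    citations = [0] * 90 + [1] * 9 + [1000]
    print(f"Gini: {gini(citations):.3f}")
    print(f"Top 1% share: {top_share(citations):.3f}")
```

Running the same two functions over a real citation database would turn the guess about the top 1% into an empirical statement.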

Put differently: Is everyone trying to be a Kardashian, on Twitter or off it, but some people are just better or luckier in the attempt?

I find this account quite plausible, but what does any of this have to do with scientific contributions?

As already pointed out, attention (or fame) matches real life contributions rarely, if ever. This is mostly due to the fact that real life contributions are hard to measure. Science is no exception. Citation counts are likely a better proxy for fame than for genuine contributions. If anything – the “Kardashian index” relates two different kinds of fame, fame inside a particular field of scientific inquiry relative to fame in the more general population. But who is to say that one kind of fame is better than another kind of fame? If one wants to save the “Kardashian index”, it would make sense to rebrand its inverse as the “insularity index” or “obscurity index” – do you get your message out only to people in your field or are you able to reach people more generally?

There are many ways for scientists to make a contribution. Not all of them are directly related to original research. Teaching comes to mind, reviewing too. The job of a modern academic is complex. One can do all of these things with varying degrees of seriousness. The reason why this became an issue in the first place is that outreach efforts (proxy: Twitter) and publications (proxy: citations) can be easily quantified. That doesn’t mean that it is valid to do so or that one is necessarily better than the other. A research paper is a claim that is hopefully backed up by good data created by original research. But as the replication crisis shows, many of these claims turn out to be unsubstantiated, born from methodological issues, not seminal insights. To be sure, academia would be well advised to develop better metrics of performance. In general, the evaluation of academic performance is often surprisingly unscientific. From papers to citations to evaluations, it is all remarkably unsophisticated at this point in time.

Ultimately, it is all about the relevance of one’s contribution. But trying to discredit an increasingly important form of academic activity (outreach), by shaming those engaged in it, is probably uncalled for. We need more interactions between scientists and the general public, not less. To be sure, a lot of what is called “outreach” today is more leech than outreach, but the real thing does exist. As for the relevance of citations: Just because someone managed to create a citation cartel where everyone in it cites each other, that doesn’t mean anyone outside of the cartel cares. Calling something an impact factor doesn’t make it so. To be clear, there is nothing wrong with deciding to focus on original research. As a matter of fact, our continued progress as a species depends on it, if done right. But lashing out at others who decide to make a different choice does invite responses that will tend to point out that the situation is akin to dinosaurs celebrating their impending fossil status. At any rate, evolution will continue regardless. Wisdom consists in large part of not being trapped by one’s own reward history when the contingencies change. But good luck staying relevant in the 21st century without a social media strategy.

As everyone knows, Mark Twain observed that it is inadvisable to pick fights with people who buy ink by the barrel. Is the modern day equivalent that it is unwise to try to shame people who buy followers by the bushel?

PS: Of course, all of this bickering about who is more famous, who contributed more and who is more deserving of the fame is somewhat unbecoming and indicative of a deeper problem. As far as I can tell, the root cause for this problem is that society does not scale well in this regard. Historically, humans lived in small groups of under 150 individuals. In such a group, it is trivial to keep track of who did what and how much that contributed to the welfare of the group. There is no question that the circuitry that handles this tallying was not designed to deal with a group size of millions – or even billions (if one were to adopt a global stance). It is not trivial at all to ascertain who contributed something meaningful and who got away with something with a group size that big. Unless we find a way to handle these attribution problems (modern society obviously allows for plenty of untrammeled freeloading) in general – perhaps with further advances in technology that allow more advanced forms of social organization – this issue will keep coming up, unresolved. If you have any suggestions how to solve this or a perspective of your own, please don’t refrain from commenting below.

PPS: Of course, there is an even more general issue behind all of this, namely the relationship between contribution and reward. No society is great at making this relationship perfect. Interested parties can exploit these misallocations by gaming the system. The question is what one should do about this and how one ought to perfect the system. Obviously, one way to deal with this is to shame the people who game the system, but I worry that the false-positive (and miss!) rate might be quite high. If so, that doesn’t really improve the overall justice in the system. A better way might be to make the system more robust against this kind of abuse. Ultimately the best long-term policy is virtue.

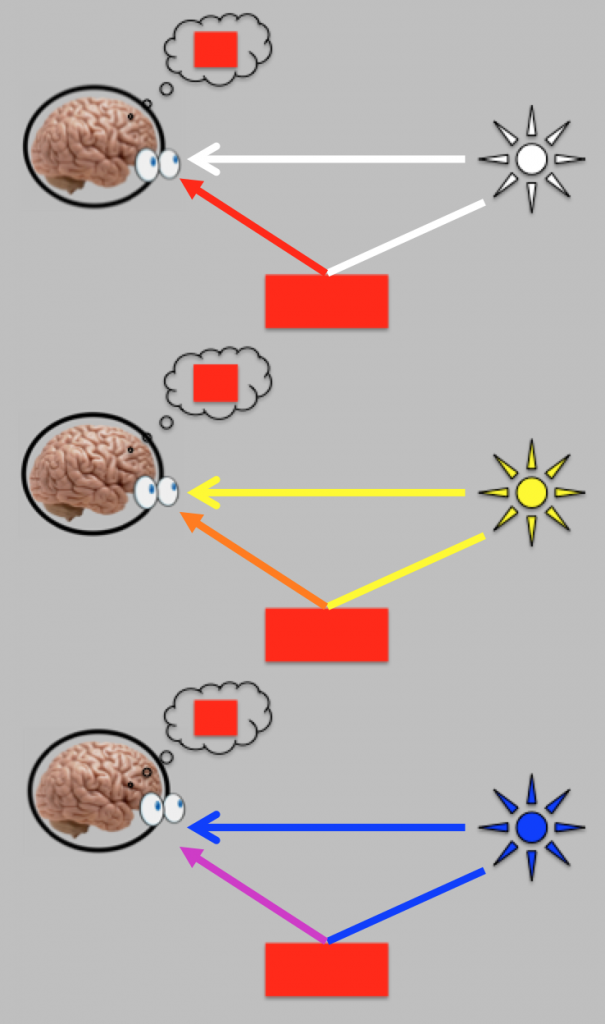

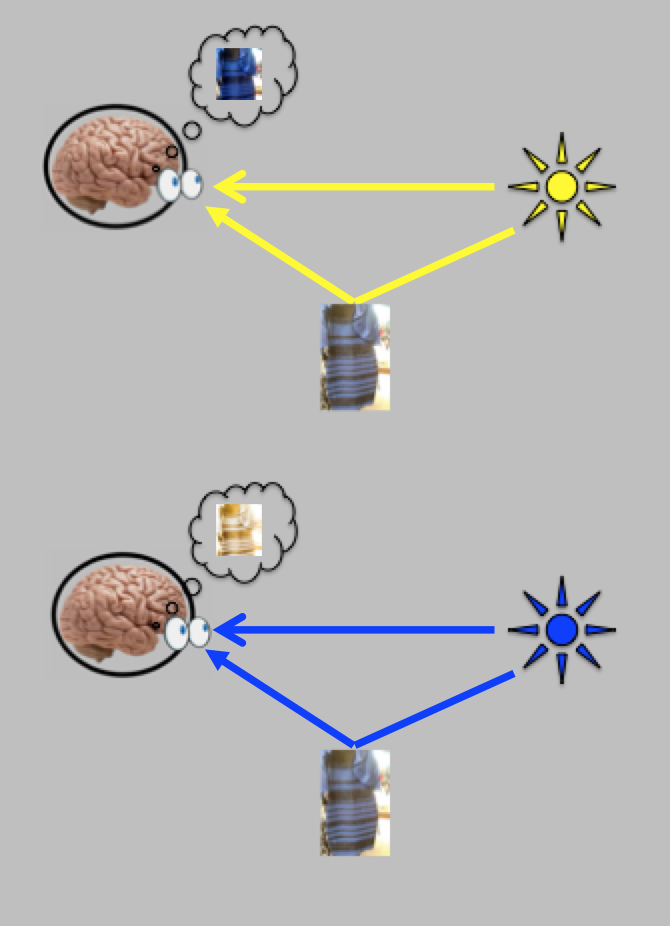

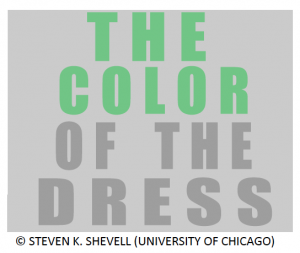

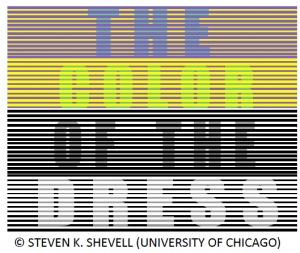

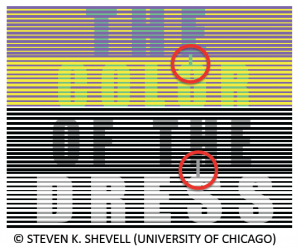

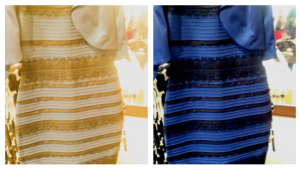

When #thedress first came out in February 2015, vision scientists had plenty of ideas why some people might be seeing it differently than others, but no one knew for sure. Now we have some evidence as to what might be going on. The illumination source in the original image of the dress is unclear. It is unclear whether the image was taken in daylight or artificial light, and if the light comes from above or behind. If things are unclear, people assume that it was illuminated with the light that they have seen more often in the past. In general, the human visual system has to take the color of the illumination into account when determining the color of objects. This is called color

Tracking the diversity of popular music since 1940

This is a rather straightforward post. Our lab is doing research on music taste and one of our projects involves sampling songs from the Billboard Hot 100. It tracks the singles that made it to #1 on the US charts (and for how long they were on top), going back to 1940.

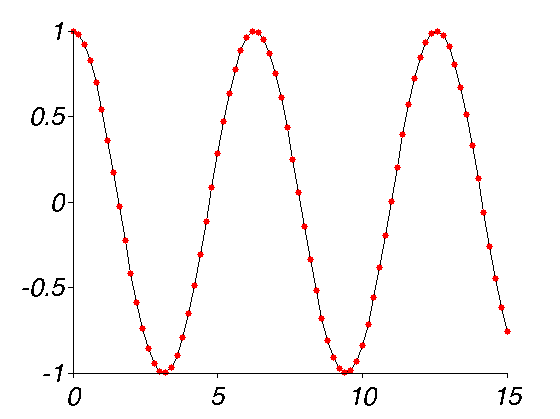

Working on this together with my students Stephen Spivack and Sara Philibotte, we couldn’t fail to notice a distinct pattern in the diversity of music titles over time. See for yourself:

Musical diversity over time. Note that the data was smoothed by a 3-year moving average. A value of just above 10 in the early 40s means that an average song was on top of the charts for over 4 weeks in a row. The peak levels in the mid-70s mean that the average song was only on top of the charts for little more than a week during that period.

Basically, diversity ramped up soon after the introduction of the Billboard charts, and then had distinct peaks in the mid-1960s, mid-1970s and late 1980s. The late 1990s peak is already much diminished, ushering in the current era of the unquestioned dominance of Taylor Swift, Katy Perry, Rihanna and the like. Perhaps this flowering of peak diversity in the 1970s, 1980s and 1990s accounts for the distinct sound that we associate with these decades?

Now, it would of course be interesting to see what drives this development. Perhaps generational or cohort effects involving the proportion of youths in the population at a given time?

Note 1: “Diversity” here literally means “number of different songs over a given time period”, that is, the temporal difference density. It is quite possible that these “different” songs are actually quite similar, but there is no clear metric by which to compare songs or a canonical space in which to compare them. Pandora has data on this, but they are proprietary. So if you prefer “annual turnover rate”, it would probably be more precise.

Note 2: My working hypothesis as to what drives these dynamics (which are notably phase-locked to the decade) is some kind of overexposure effect. A new style comes about that coalesces at some point. Then, a dominant player emerges, until people get bored of the entire style, which starts the cycle afresh. A paradigmatic case as to how decade-specific the popularity of music is would be Phil Collins.

Note 3: Similarity of music might be hard to quantify objectively. Lots of things sound similar to each other, e.g. the background beat/subsound in Blank Space and the background beat/subsound in the Limitless soundtrack.
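For those who want to replicate the diversity measure from Note 1, here is a minimal sketch of the computation: count the distinct #1 songs per year, then smooth with a 3-year moving average. This is not our actual analysis code. The input format (one `(year, song)` record per chart week), the function names, and the choice of a centered window that shrinks at the edges are all assumptions made for illustration, and the sample records are invented.

```python
from collections import defaultdict


def distinct_number_ones_per_year(weekly_chart):
    """Count distinct #1 songs per year from (year, song) weekly records."""
    songs_by_year = defaultdict(set)
    for year, song in weekly_chart:
        songs_by_year[year].add(song)
    return {year: len(songs) for year, songs in sorted(songs_by_year.items())}


def centered_moving_average(series, window=3):
    """Centered moving average over a {year: value} dict.

    Assumes the years are consecutive; at the edges the window shrinks
    to whatever years are available.
    """
    years = sorted(series)
    half = window // 2
    smoothed = {}
    for i, year in enumerate(years):
        lo = max(0, i - half)
        hi = min(len(years), i + half + 1)
        values = [series[y] for y in years[lo:hi]]
        smoothed[year] = sum(values) / len(values)
    return smoothed


if __name__ == "__main__":
    # Invented sample: one record per week at #1 (not real chart data).
    weekly = [
        (1940, "A"), (1940, "A"), (1940, "B"),
        (1941, "C"),
        (1942, "D"), (1942, "E"), (1942, "F"), (1942, "D"),
    ]
    counts = distinct_number_ones_per_year(weekly)
    print(counts)
    print(centered_moving_average(counts))
```

Dividing 52 by the yearly count gives the average number of weeks a song spent on top, which is how the values in the figure caption translate into weeks.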