Our perception corresponds to an idiosyncratic model of reality, not reality itself.

This is easy to forget, as we all share a common external reality and process it with a cognitive apparatus that has been honed over billions of years to work properly. Yet, this is a profound truth.

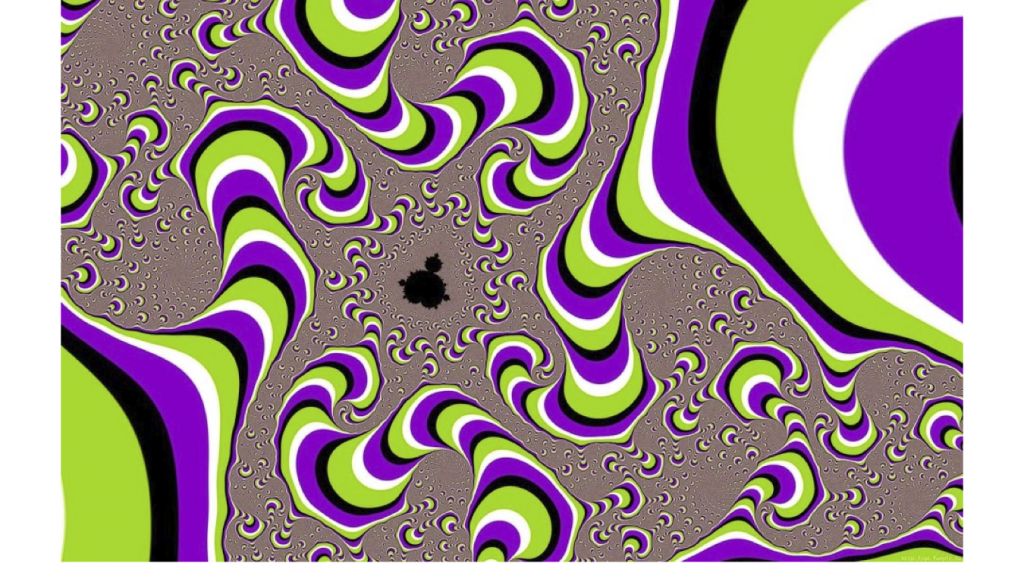

Do you see motion? If so, it was created by your brain. There is nothing moving in the image in the outside world. But psychologists now understand the contrast gradients that can be used to make the brain assume the presence of motion.

It is important to recognize that the perceptual model does not necessarily correspond to objective reality. This is not a failing of the system. On the contrary, as it almost always has to work with incomplete information at the front end, gaps in evolutionarily relevant information are filled in from other sources, be they other modalities, correlations with other cues, or correlations that have been learned during ontogeny and phylogeny. Put differently, it is more adaptive for organisms to make educated guesses about what is out there than to take a strictly agnostic position if information is missing.

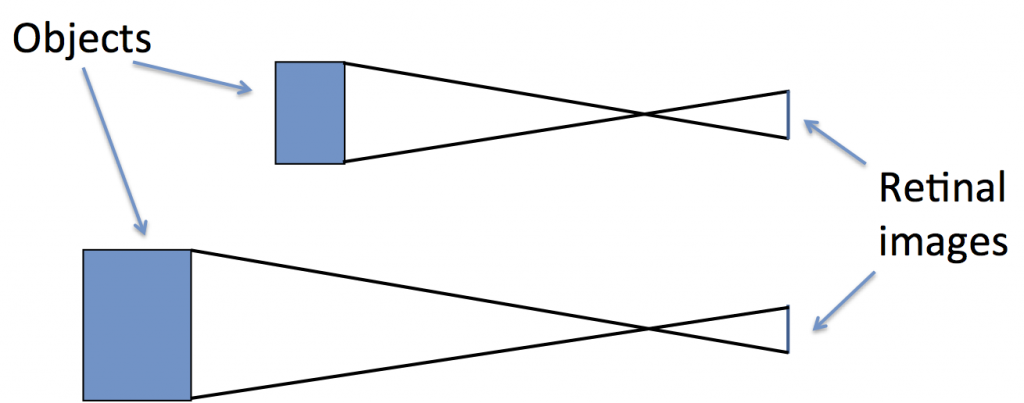

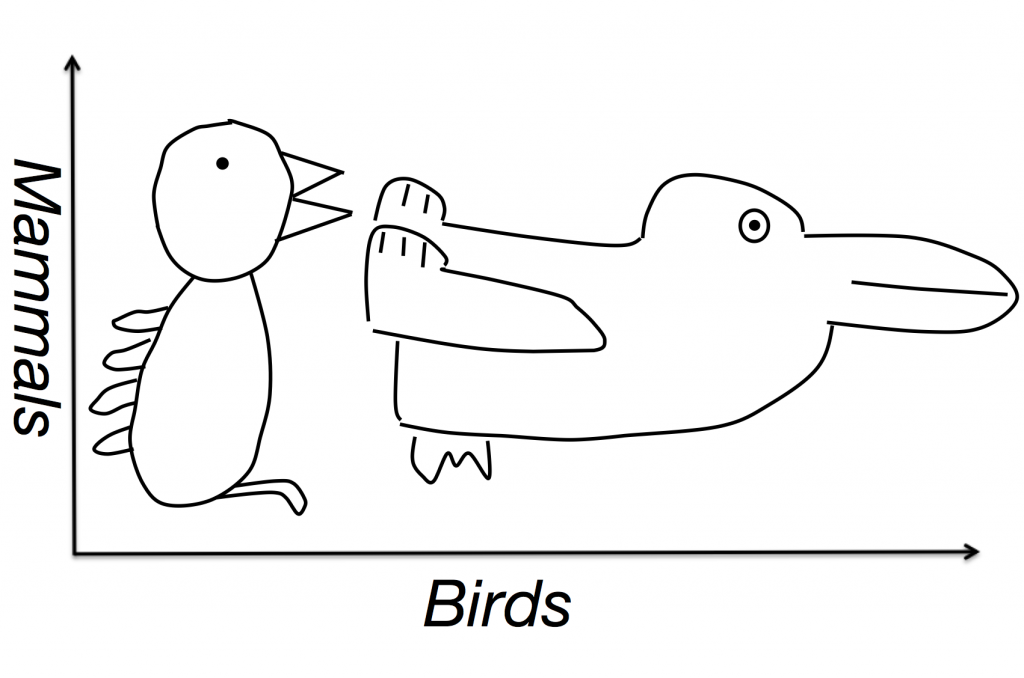

The perception of depth information is a good example of this. The spatial outside world is (at least) three-dimensional, yet the receptors that transduce the physical energy from photons to electrical energy that the brain can process are arranged in a two-dimensional sheet at the back of the eye, as a part of the retina. In other words, an entire dimension of information – how far away things are – is lost up front. Strictly speaking, the brain has no genuine distance information available whatsoever. Yet, we see distance just fine. Why? Because this is a dimension that the brain can ill afford to lose in terms of survival and reproduction. It is critical to know how far away predator and prey are, to say nothing of all other kinds of objects, if only not to bump into them. So what is the brain to do? In short, it uses a great many tricks to recover depth information from two-dimensional images. Most of these “depth cues” are now known and used by artists to make perfectly flat images look like a scene with great depth or even make movies look three-dimensional. Strictly speaking, this constitutes an error of the system, as the images are really two-dimensional, but this is an adaptive kind of error. Given how complicated the problem is – operating with so little available information – even the best guess can still be a wrong one.

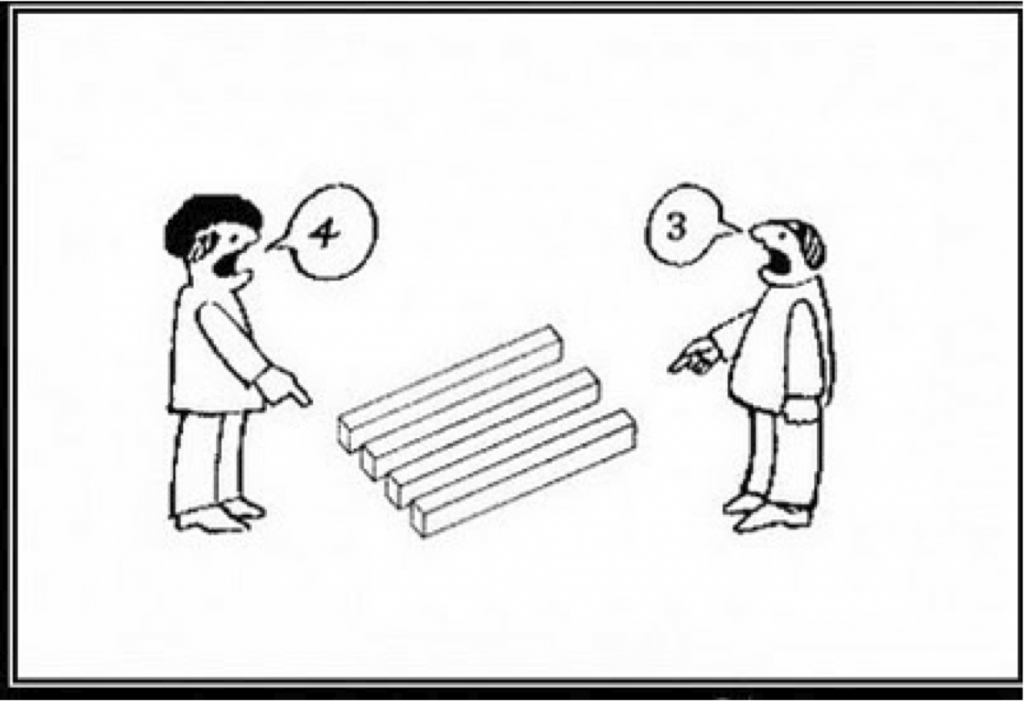

A bigger object that is farther away takes up the same space on the retina as a smaller one that is closer. How, then, is the brain supposed to know which one is closer and which is farther? This information is not contained in the size of the retinal image traced by the object itself, as it is a projection, and has to be reconstructed by the brain.
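The size-distance ambiguity can be made concrete with a little trigonometry. The sketch below is only an illustration, assuming a simplified eye in which retinal image size is proportional to the visual angle an object subtends; the function name `visual_angle_deg` and the example sizes and distances are made up for this purpose.

```python
import math

def visual_angle_deg(object_size, distance):
    """Angle (in degrees) subtended at the eye by an object of the
    given size at the given distance (same units for both)."""
    return math.degrees(2 * math.atan(object_size / (2 * distance)))

# Hypothetical example: a small object nearby, and one twice as big, twice as far away.
small_near = visual_angle_deg(object_size=1.0, distance=10.0)
large_far = visual_angle_deg(object_size=2.0, distance=20.0)

# Both subtend the same angle (a bit under 6 degrees), so the retinal
# image alone cannot say which object is closer.
print(small_near, large_far)
```

Any pair of objects with the same ratio of size to distance produces the same angle, which is why the brain has to bring in additional cues to recover distance.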

The recovery of distance information illustrates the principle nicely, but it is far from the only case. Other perceptual aspects like color, shape or motion are derived in a similar fashion. The brain almost always constructs its model on the basis of incomplete information. There are many processing steps that go into the construction of the model, and most of them are unconscious; only the final percept is conscious. Many brain areas are involved in the construction of this model (in primates, between 30 and 50% of the brain).

One thing that is remarkable about all of this is that the brain fully commits to a particular interpretation of the available information at any given time. Even if the perceptual input is severely degraded, the brain rarely hedges, if the result is compatible with a coherent model of the world. The end-user is not informed which parts of the percept correspond to relatively “hard” information and which were “filled in”, but really correspond to little more than educated guesses about what is out there. This seems to be a general processing principle of the brain. Moreover, if the available information is inherently ambiguous and compatible with several different interpretations, it does not flag this fact. Instead, second thoughts manifest as a (sometimes rapid) switching between different interpretations, yet a meta-perspective is not taken by the perceptual system. A particular interpretation of the available information usually comes at the exclusion of all others, if only for a time. Interpretations switch, they don’t blend.

Arguably, this necessarily has to be so, as this system provides an interpretation of the outside world not for our viewing pleasure, but to be actionable, i.e. to guide and improve motor action. In the natural world, both indecision and dithering incur serious survival disadvantages. To be sure, flip-flopping is also not without its perils, but fully bistable and inherently ambiguous stimulus configurations are probably rare outside of the laboratory of the experimenter, so evolution probably didn’t have to make allowances for that. (These displays are designed specifically to probe the perceptual apparatus, and doing this in a fashion that doesn’t bias the stimuli one way or the other is not easy.)

To summarize, the brain overcommits to a particular interpretation of the available evidence in a way that is often not entirely warranted by the strength of the evidence itself. It does so for a good reason – survival – but it is nevertheless doing it. This has social implications.

Given the slightly disparate – if incomplete – information, individual differences in brain structure and function as well as a different history of experience accumulation (which in itself colors future perception), it is to be *expected* that different people reconstruct the world differently, sincerely but quite literally seeing things differently.

The construction of a perceptual model of the world involves so many steps that it is not surprising that the end-result can be idiosyncratic. What is surprising is that we have not yet fully wised up to this fact. The fact that it is happening is not a secret; modern psychology and neuroscience are pretty unequivocal that this is basically how perception works. Most of us also know about this from personal experience. Visit any discussion board on any issue and you will see this effect in action. Every individual feels strongly (and often sincerely) that they are right – and by implication that everyone who is at variance with their stance is wrong. This is very dangerous, as we are closing ourselves off to other viable positions. The other side is – at best – misinformed, if not outright malicious or disingenuous. In addition to the illusory certainty of perception, there is often an immediate emotional reaction to things we disagree with: a righteous anger that has cost many a Facebook friendship and triggered quite a few outrage storms on Twitter, with no end in sight.

The traditional way to resolve this kind of dispute is by resorting to violence. In the modern world, this kind of conflict resolution is frowned upon, partly due to the domesticating effects of civilization and partly due to the effectiveness of our weapons, which makes this path a little too scary these days. So what most people now do is to engage in a kind of verbal sparring as a substitute for frank violence. But this is often rather ineffective, as few people can be convinced in that way. There is pretty good evidence that by talking to the other person, we are just talking to their PR department, which spins a story in any way possible. So most of these exchanges devolve into attempts at silencing the other side by shaming. All of these tactics are highly divisive. Popular goodwill is a commons and it is easily polluted. Now, it might be possible that social interactions simply don’t scale. Small tribes need to find a consensual solution in order to foster coherent action, but large and diverse societies like ours don’t allow this easily.

A different approach opens up if we take the insights from perceptual psychology and neuroscience seriously. If we acknowledge that our brains have a tendency to construct highly plausible but ultimately overconfident models based on scant and, in a large society, necessarily disparate information, dissent is to be expected. It should not (necessarily) be interpreted as a personal attack or slight. Put differently, benign dissent is the default mode that should be expected from the structure of our cognitive apparatus. It is not necessary to invoke malice. Nor is it necessary to invoke ignorance. The other side might well be less informed. But it is also plausible that they simply had different experiences (and a different cognitive apparatus to process them).

Once this is acknowledged, there are two ways to go about building common ground. The first would be to – instead of arguing – figure out what kind of evidence the other side is missing and to provide it, if possible. The second, if that is not possible, would be to point out a different possible interpretation of the evidence available to both.

Of course, this is a particular challenge online, as many statements are not made to have a genuine discourse, but rather for signaling purposes, showing one’s tribe how good of a person one is by toeing the tribal line. Showing tribal allegiance is easy. It also typically doesn’t cost an individual anything, nor does it usually achieve anything. But the cost to the commons is big, namely tribalism. This is indeed a tragedy as we all do have to share this planet together, like it or not. As online discourse matures, it is my sincere hope that this kind of fruitless and toxic pursuit will be flagged as an empty attempt at signaling, discouraging individuals who engage in it from doing so.

There is a hopeful note on which to end: Taking the lessons of perceptual research seriously, we can transcend the tribalism our ancestors used to get to this level, but that now prevents society from advancing further. Once we recognize that it is tribalism that is holding us back, we can use the different perspectives afforded by different people using different brains to get a higher order imaging of reality than would be possible by any individual brain (as any individual brain necessarily has to take a perspective, see above). Being able to routinely do this kind of simultaneous multi-perspective imaging of reality would be the mark of a truly advanced society. Perceptual research can pave the way. If its lessons are heeded.

You make the point that our finely honed visual system creates a coherent picture from fragmentary information and, in the process, sometimes gets it wrong. You then use that as an analogy for the way we perceive our social world, quickly constructing cognitive maps from partial information, but in this case we get it wrong more often than not.

We have had millions of years to perfect our visual mapping system but only 50,000 years or so to make a workable cognitive mapping system that uses linguistic input instead of visual input. So we shouldn’t be surprised that it is so imperfect.

As you say, the cost is tribalism, as we take refuge from the misperceptions by seeking the confirmation of those who share our own perceptions.

The solution, you say, is

‘Once we recognize that it is tribalism that is holding us back, we can use the different perspectives afforded by different people using different brains to get a higher order imaging of reality than would be possible by any individual brain (as any individual brain necessarily has to take a perspective, see above). Being able to routinely do this kind of simultaneous multi-perspective imaging of reality would be the mark of a truly advanced society.’

I agree that it would be the mark of a truly advanced society. In fact it would be quite remarkable, if you will pardon the pun.

But how do we achieve this? Each of us is locked up in a bony enclosure that constitutes the entire world to us. We have a limited theory of mind that recognises, to some extent, other minds. We use speech in two channels, to signal our needs on one level and to negotiate our needs on another level. But this is all about our own needs; it is narcissistic and solipsistic.

I suggest the real problem is not so much our failure to perceive the needs of others as the failure to value the needs of others. This makes it a moral problem and not a technical problem. When we learn to value the needs of others we pay more attention to their signalling and negotiation. We then form a better picture of the social world around us and make more useful adjustments to it.

This, then, is the problem, a moral problem: learning to value the needs of others and thus learning to pay close attention to what it is they are signalling and trying to negotiate. The consumer-driven world of hedonism and narcissism, epitomized by the ‘selfie’, is leading us in the opposite direction, to greater self-absorption and thus increasing tribalism.

The solution was already known 2000 years ago.

“A new command I give you: Love one another. As I have loved you, so you must love one another.” (John 13:34)

On our personal time scale we are slow learners but it might turn out that on evolutionary time scales we are lightning fast learners.

You described the fascinating phenomenon of the perceptual system always delivering certainty. You then suggested that the reason for certainty in reasoning and opinions on politics and such may have the same root cause. That’s a very interesting hypothesis! One difference is that the perceptual system can (in what may admittedly be rare cases) oscillate 100% between incompatible alternatives. But for cognitive beliefs, it seems that never happens. Why not? What is different about the cognitive system that prevents that?

This is a very good point. Here is my intuition: Bistable perceptual phenomena (that flip back and forth) are usually characterized by extreme sparsity, e.g. a bunch of dots moving on a path where velocity is sinusoidally modulated is compatible with a sphere spinning either leftward or rightward. But the brain doesn’t represent that. You are given only one consistent interpretation at a time. The fact that the input is consistent with both interpretations is reflected by rapid switching (due to many factors, all of which effectively correspond to subtle changes in the input, e.g. eye movements, attention, adaptation, etc.). The point is that all of these perceptual effects are strongly dominated by the immediate inputs, as they presumably result from processes early in the brain.
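To see why the dot display is ambiguous, here is a minimal sketch. It assumes an orthographic (flat) projection of a single dot on a unit sphere rotating about the vertical axis; the function names and the particular angle and speed are hypothetical choices for illustration, not a model of any specific experiment.

```python
import math

def projected_x(angle, angular_velocity, t, radius=1.0):
    """Horizontal screen position of a dot on the rotating sphere."""
    return radius * math.sin(angle + angular_velocity * t)

def depth(angle, angular_velocity, t, radius=1.0):
    """Position of the same dot along the line of sight (lost in the projection)."""
    return radius * math.cos(angle + angular_velocity * t)

theta, omega = 0.7, 1.5                     # arbitrary starting angle and rotation speed
times = [0.1 * i for i in range(50)]

# A dot on the front surface rotating one way, and a mirrored dot on the
# back surface rotating the other way, trace the same path on the screen...
same_image = all(
    math.isclose(projected_x(theta, omega, t),
                 projected_x(math.pi - theta, -omega, t), abs_tol=1e-9)
    for t in times
)
# ...while sitting at opposite depths.
opposite_depth = all(
    math.isclose(depth(theta, omega, t),
                 -depth(math.pi - theta, -omega, t), abs_tol=1e-9)
    for t in times
)
print(same_image, opposite_depth)
```

The screen position follows a sine of time, so its velocity is sinusoidally modulated, and two opposite rotations with reversed depth produce an identical image. Nothing in the input favors one over the other.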

It is my strong suspicion that ideology works similarly. However, it is not so much anchored in immediate perceptual input, but rather in lots and lots of memory: ultimately, previous encodings of experiences. This is what someone could legitimately call a world view or “Weltanschauung”. Put differently, I think that two people can legitimately look at the same evidence and come to radically different conclusions because they have such different priors, due to a lifetime of overconfident and binary interpretations. So switches are unlikely. Similarly, switches of interpretation are unlikely in perception if the perceptual inputs are rich and multiple cues agree.
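The role of priors can be illustrated with a toy calculation. This is only a sketch that assumes beliefs are updated according to Bayes’ rule, which is a simplification introduced here and not a claim about how brains actually do it; the function name `posterior` and all numbers are invented for the example.

```python
def posterior(prior, likelihood_if_true, likelihood_if_false):
    """Bayes' rule: probability of a hypothesis after seeing one piece of evidence."""
    numerator = prior * likelihood_if_true
    return numerator / (numerator + (1 - prior) * likelihood_if_false)

# Both observers see the same evidence, which is twice as likely
# if the hypothesis is false (0.6) as if it is true (0.3).
evidence = dict(likelihood_if_true=0.3, likelihood_if_false=0.6)

confident_believer = posterior(prior=0.95, **evidence)
confident_skeptic = posterior(prior=0.05, **evidence)

# Both shift in the same direction, yet the believer stays above 0.9
# and the skeptic stays below 0.05.
print(confident_believer, confident_skeptic)
```

With priors this extreme, a single piece of moderately strong evidence barely moves either observer, which is one way to picture why ideological switches are rare.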